Gurobi’s powerful MIP algorithm allows you to add complexity to your model to better represent the real world, and still solve your model within the available time.

The Gurobi model library consists of over 10,000 commercial models sourced from academia and from our industry prospects and customers. We test every change we make to the solver against this library, so we know that each new version of Gurobi delivers meaningful performance improvements to our users.

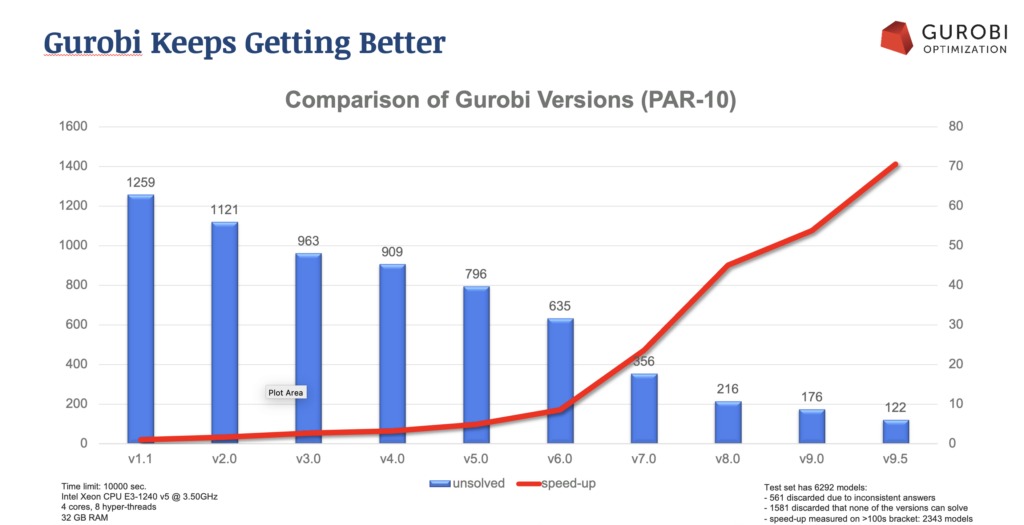

See how Gurobi performance has grown with each new release.

Benchmarking is an important aspect of evaluating a solver, and public benchmarks can certainly provide a very useful perspective in your evaluation process. When looking at any benchmark test, there are some critical points to consider in order to truly understand, evaluate, and select which solver is best for you.

We firmly believe that our software and our library are the most robust on the market—and we consistently win almost every major public benchmark test. Unfortunately, if we test competing solvers against our library, competitor licensing restrictions prevent us from publishing the results.

Benchmark results can fluctuate over time as companies introduce new versions of their solvers. With few exceptions, the Gurobi Optimizer consistently wins in public benchmark test results.

Gurobi keeps getting better and better with each version.

Because benchmark tests are usually run using a solver’s default settings, it’s important to understand what those defaults are. But because defaults are chosen to provide the best overall performance across a range of models, they’re often not optimized for a particular model.

Understand benchmark tests in context of their defaults. Use them as a starting point, and ultimately test solvers against your own models.

Some benchmark tests can be misleading, intentionally or not. If a company cherry-picks models and tunes its solver for that subset of models, it may be able to claim superiority over recognized industry-leading solvers. With a deeper look, you may find that the selected models are purely academic and not reflective of the real world, or that tuning the opposing solver would result in much better performance than the test parameters indicate.

Make sure the results you’re seeing aren’t being manipulated or misconstrued to appear more impressive than they are.

It’s important to determine whether a test measures something that is meaningful to you in practice. A test that measures the time required to produce poor-quality solutions isn’t relevant if your application requires high-quality solutions.

Evaluate the benchmark test and the solver’s performance based on the problems and models you need to solve.
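One way to summarize results on your own models is sketched below. It is a minimal illustration, not a method described on this page: it assumes you have already recorded solve times per model for two configurations, and it aggregates them with a shifted geometric mean, a summary statistic often used in solver benchmarks because it dampens the influence of a few very slow or very fast models. The function name, the shift of 1 second, and the runtimes are all hypothetical.

```python
import math


def shifted_geomean(times, shift=1.0):
    """Return exp(mean(log(t + shift))) - shift for a list of runtimes.

    The shift (an assumed 1 second here) keeps near-zero runtimes from
    dominating the average.
    """
    if not times:
        raise ValueError("need at least one runtime")
    log_sum = sum(math.log(t + shift) for t in times)
    return math.exp(log_sum / len(times)) - shift


# Hypothetical solve times in seconds for the same three models,
# measured under two different solver configurations.
config_a_times = [1.0, 3.0, 7.0]
config_b_times = [1.0, 1.0, 3.0]

mean_a = shifted_geomean(config_a_times)
mean_b = shifted_geomean(config_b_times)

# A ratio above 1 means configuration B was faster on this model set.
ratio = mean_a / mean_b
print(f"A: {mean_a:.2f}s  B: {mean_b:.2f}s  ratio A/B: {ratio:.2f}")
```

Whatever statistic you choose, compute it on the models you actually need to solve and at the solution quality your application requires.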

When testing a solver, you need the opportunity to tune performance to your specific models. Gurobi includes over 100 parameters to adjust, and an Automatic Tuning Tool that intelligently explores parameter settings and returns with advice on specific settings you can use to optimize the solver for your model(s).

Using default settings, Gurobi has the fastest out-of-the-box performance. By using the Automatic Tuning Tool to tune the parameters for each individual model, mean performance across the models increases by 68%. Our distributed tuning capabilities show a 152% performance improvement in the same amount of tuning time.
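As a rough sketch of what running the tuning tool on one of your own models might look like from Python, the example below uses the `gurobipy` interface. It assumes `gurobipy` is installed with a valid license; the function name, the file naming scheme, and the time limit and result count are illustrative choices, not recommendations from this page.

```python
def tune_and_save(model_path, time_limit_s=600, max_results=3):
    """Tune one model file and write each improved parameter set to disk.

    Assumes gurobipy is installed and licensed. The import sits inside
    the function so this module can be loaded without Gurobi present.
    """
    import gurobipy as gp

    model = gp.read(model_path)                 # e.g. an .mps or .lp file
    model.Params.TuneTimeLimit = time_limit_s   # total tuning budget in seconds
    model.Params.TuneResults = max_results      # number of parameter sets to keep

    model.tune()

    saved = []
    # Results are ordered best first, so index 0 is the best setting found.
    for i in range(model.TuneResultCount):
        model.getTuneResult(i)        # load the i-th parameter set into the model
        prm_file = f"tune_{i}.prm"    # hypothetical output file name
        model.write(prm_file)         # save those parameters as a .prm file
        saved.append(prm_file)
    return saved
```

A call such as `tune_and_save("my_model.mps")`, where `my_model.mps` stands in for your own model file, would return the list of parameter files written. Re-solving with those settings and comparing against the defaults shows whether tuning helps on that model.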

